Hardware and Software Development: The Essential Alliance for Technological Innovation

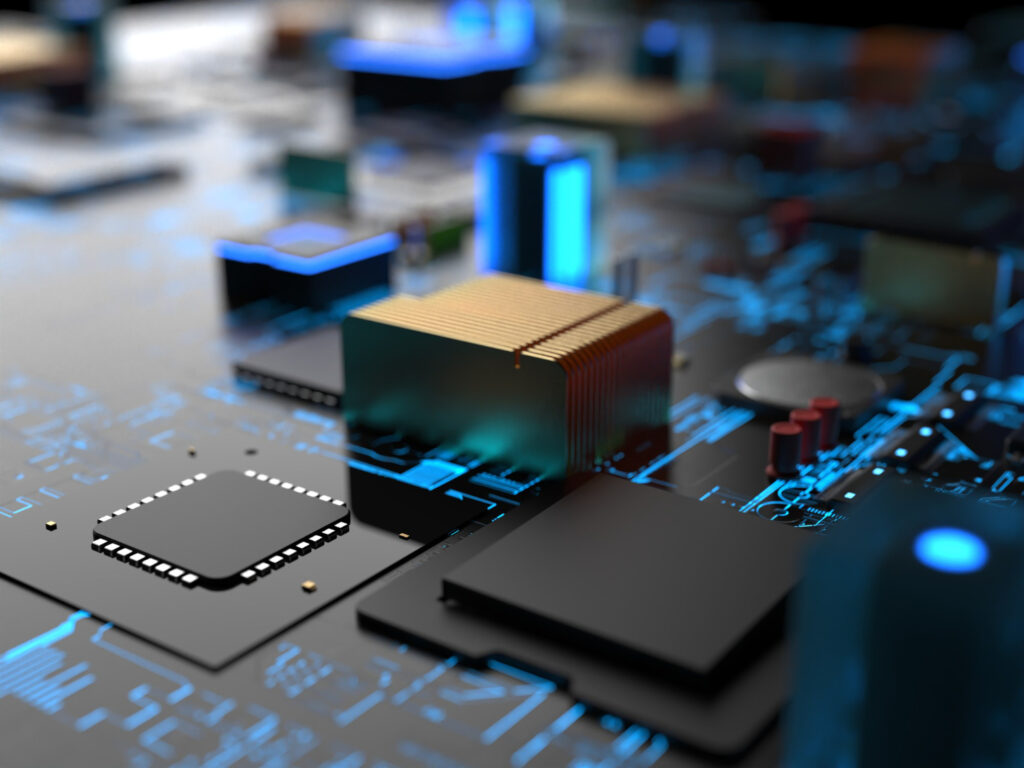

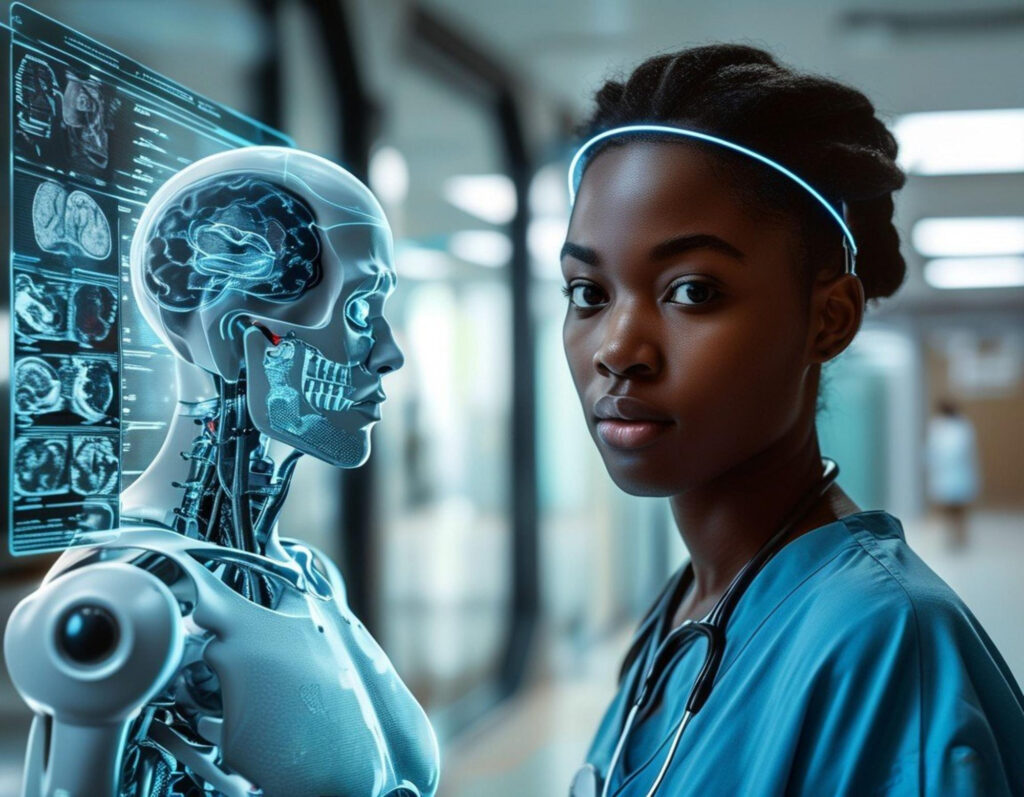

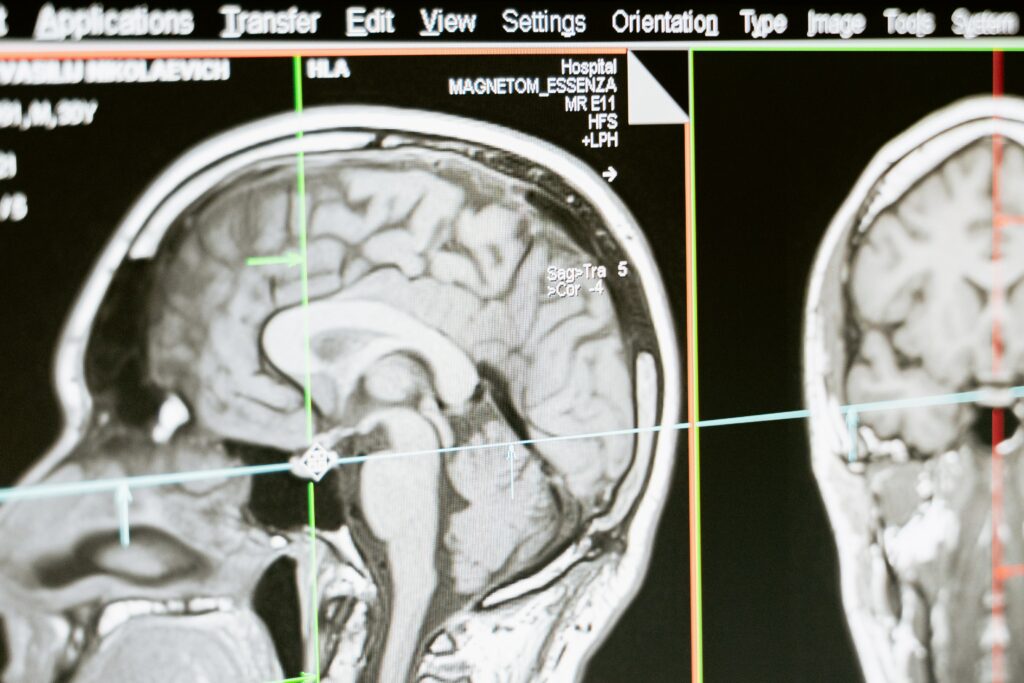

Hardware and software development are at the heart of the technological innovations that shape our world. These two fields, though distinct, are inseparable when it comes to creating high-performance, reliable technological solutions. Whether it’s smartphones, autonomous cars or medical devices, the harmonious integration of hardware and software is essential to deliver optimal user experiences and meet growing market needs.

Interaction between hardware and software

Hardware and software development must be seen as a collaborative process. Hardware is the physical foundation on which software runs, but without well-designed software, even the most advanced hardware cannot reach its full potential. Hardware engineers focus on designing robust components such as processors, motherboards and storage devices, while software developers create programs that use these components to perform specific tasks.

Challenges in hardware and software development

The joint development of hardware and software presents unique challenges. One of the main obstacles is compatibility. Software must be matched to the hardware it runs on to avoid performance problems such as slowdowns or crashes. Moreover, a hardware update often calls for a software update, so the two development teams need to coordinate continuously.

Another challenge is managing power consumption. In mobile devices, for example, developers need to ensure that software uses hardware resources efficiently in order to extend battery life. This requires a thorough understanding of the inner workings of the hardware, as well as software optimization skills.

The importance of customization in hardware and software development

The current trend in hardware and software development is towards customization. Companies are increasingly looking to create tailor-made solutions that specifically meet the needs of their users. This means that software must be designed to take advantage of the hardware’s unique capabilities, offering a smoother, more intuitive user experience.

For example, in video games, the development of specialized hardware, such as high-end graphics cards, is often accompanied by software optimized to take full advantage of these resources. Similarly, in the medical sector, connected medical devices require software capable of rapidly processing and analyzing complex data, while running on reliable and accurate hardware.

The future of hardware and software development

The future of hardware and software development lies in continuous innovation and the integration of new technologies, such as artificial intelligence and the Internet of Things (IoT). These technologies call for even closer interaction between hardware and software, paving the way for intelligent devices capable of learning and adapting to user needs.

The rise of cloud computing is another factor influencing hardware and software development. With the cloud, much of the processing can be offloaded to remote servers, enabling the design of devices with less powerful hardware that rely heavily on software and network connectivity to deliver high performance.

Conclusion

Hardware and software development is a complex but essential discipline in today’s digital economy. The synergy between these two fields enables the creation of innovative products that not only meet but exceed user expectations. As technology continues to evolve, close collaboration between hardware and software developers will remain crucial to the success of tomorrow’s technology projects.